Interpreter mode on Pixel 6 featuring Simu Liu; Marvel Shang-Chi

Intro: Google Live Translate (powered by Assistant Interpreter mode on Pixel 6)

Challenge + Response

Role: Design Lead for Interpreter Mode on Live Translate

XPA Teams > Android, Lens, Pixel, Speech, NLU, Assistant, Translate and many more

Launched: 2021 on Pixel 6

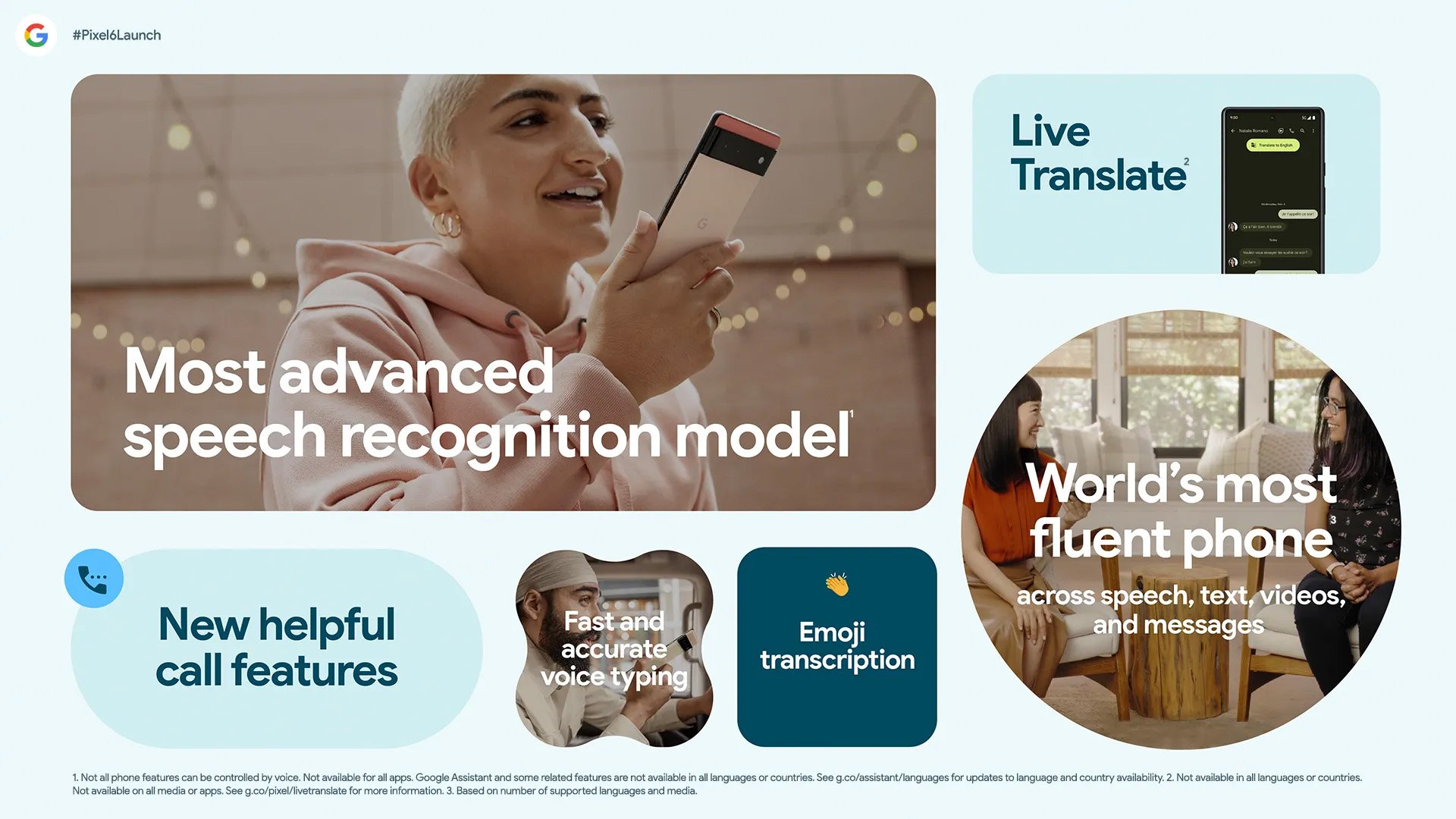

I helped lead a team of creatives and an xPA initiative that developed the world’s most fluent phone and brought translation to Pixel. We were challenged to develop the creative strategy and product design for how Assistant Interpreter mode would be promoted as the hero speech translation feature on Pixel 6, along with 3 other core functions, in an effort called Live Translate.

Live Translate uses on-device translation that quickly and securely adapts to users’ translation needs. I'm responsible for leading design and alignment to launch ‘Live Translate’ powered by Assistant Interpreter mode on Pixel 6 and future surfaces.

Now you can understand family and friends who don’t all speak the same language, with face-to-face conversations interpreted in real time. Live Translate also spans other use cases that rely on Pixel’s accurate speech recognition, both in person and with videos, so you can get translated captions in real time for whatever you’re watching:

📱 Watch YouTube videos in other languages

📱 Stream broadcasts and livestreams from other countries

📱 Watch social media videos from around the world

The Pixel 6 Camera also has Live Translate features integrated into it, so you can hold your phone up to translate anything from a menu to a street sign in 55 languages. No apps, internet, or language courses needed.

Our Assistant Translation team collaborated cross-functionally and across product areas for over a year to develop a strategy, design thinking, and vision while gathering the necessary buy-in from senior leaders across the company. Here are some high-level metrics post launch…

18% of all Pixel 6 users have used Interpreter Mode

2X more translations on Pixel than on other Android devices

40% more Interpreter DAU penetration on Pixel 6 vs. other Android devices

📰 20+ mentions across major tech press write-ups on the Pixel 6 launch, including at least 2 dedicated pieces on Live Translate

4 global commercials launched, including a 60s TV ad showcasing

🚀 Developed a brand new system-level translation feature spanning 4 modalities: messaging, media, camera and speech

Part I: The investigation begins…

The opportunity began in early 2020 as product leads and PAs started to develop a strategy for what Live Translate should look like and which features would support it. Assistant Interpreter Mode was part of the conversation, but was not fully integrated and approved until 6 months before launch in 2021.

Market research revealed that Live Speech Translation is a key part of a user’s translation journey and a top requested feature from Marketing. Some of my foundational research showed that translation features are attractive and important to users: a uniquely Googley capability that is consistently rated highly among consumers.

Part II: Design

Many concepts were floated in collaboration with other design leads already working in this space. I collaborated closely with xFN teams to build the story, define the framework, and align new speech patterns cross-functionally with Android, Speech, and Translate partners. One of the biggest and most complex UX problems we faced for Interpreter mode was offline model management: designing a new GUI and functionality that allow users to translate conversations in selected languages with no internet connection (a top requested feature).

Part III: Ship It and Repeat!

As we launched our product and core feature, Live Translate, we received a lot of great press and continued with follow-up launches on Pixel 6a. Among the improvements we have continued to make is more powerful offline translation, achieved by simplifying the UX and adding languages you can use while not connected. Take a look at some of the press and commercials I helped launch worldwide.