Interpreter mode on Pixel 6, featuring Simu Liu (Marvel's Shang-Chi)

Intro: Google Assistant Interpreter Mode

Challenge + Response

Role: Design Lead for Interpreter Mode on Google Assistant

XPA Teams > Android, Lens, Pixel, Speech, NLU, Assistant, Translate and many more

Interpreter mode on Assistant is broken up into 5 categories: interpret, translate, dictionary, spelling, and pronunciation. I’m responsible for driving UX vision and strategy, and for helping to shape cross-functional experiences that integrate Assistant’s Language & Translation vertical.

With the Google Assistant’s interpreter mode, you can now easily communicate with your customers, even if you don’t speak the same language. Interpreter mode translates dozens of languages in real time to help businesses have free-flowing conversations with their guests at hotels, airports, customer service desks, organizations aiding humanitarian efforts, and more.

Here are some highlights:

Envisioned & evangelized new product opportunities for 2021 and beyond, grounded in foundational research I led on users' language learning and translation needs.

Developed strategic OKRs (every quarter since joining the team) in collaboration with vertical leads to align closely with Assistant goals of making Google better and building cross-functional partnerships that extend Assistant to surfaces.

Influenced adoption of Material Next patterns for core Language features, such as Interpreter mode launching on Pixel 6.

Crafted multiple executive narratives for VP and SVP reviews.

Our team collaborated closely with xPAs to increase metrics and create a foundational product that can be integrated across existing and new platforms and surfaces. Here’s a look at some of the results I helped achieve:

Improved YoY growth by 100%: on 7/15/21 our team passed 10M SAI (3.2% of Assistant overall). Since I joined on 7/1/20 to lead UX, I helped double SAI from 5M to 10M and grow QPD (queries per day) from 6.5M to 12.5M. Interpreter mode was also showcased at Google I/O, where Sundar referenced its growth in his keynote (timestamp 13:10).

"...we're getting closer to having a universal translator in your pocket". - CEO | Google Sundar Pichai

Enhancing Interpreter mode to best the world’s best

Since joining the team in 2020, I have helped lead the design and launch of over 30 features across multiple surfaces (including smart displays, mobile, and voice-only) and services (Chrome, YouTube, Android, and more) across the Google ecosystem. Here are a few of those launches.

Instant Actions: Interpreter mode on Search

This Search-Assistant cohesion effort focused on making translation much easier and more convenient for users learning a new language or traveling abroad. Launched: this collaboration drives 1.3M SAI per day 🎉🎉🎉, which is about 28% of Interpreter mode's total SAI and about 14% of the vertical's total SAI (~9.2M).

Shaped our UX point of view to drive the launch of ‘Instant Actions’ (Interpreter mode on SRP; showcased at Google I/O).

Led design and strategy to develop a new end-to-end UX pattern that fulfills users’ translation queries and needs using contextual actions on SRP.

Crafted visual assets to support the feature's product launch at Google I/O (featured in the developer keynote).

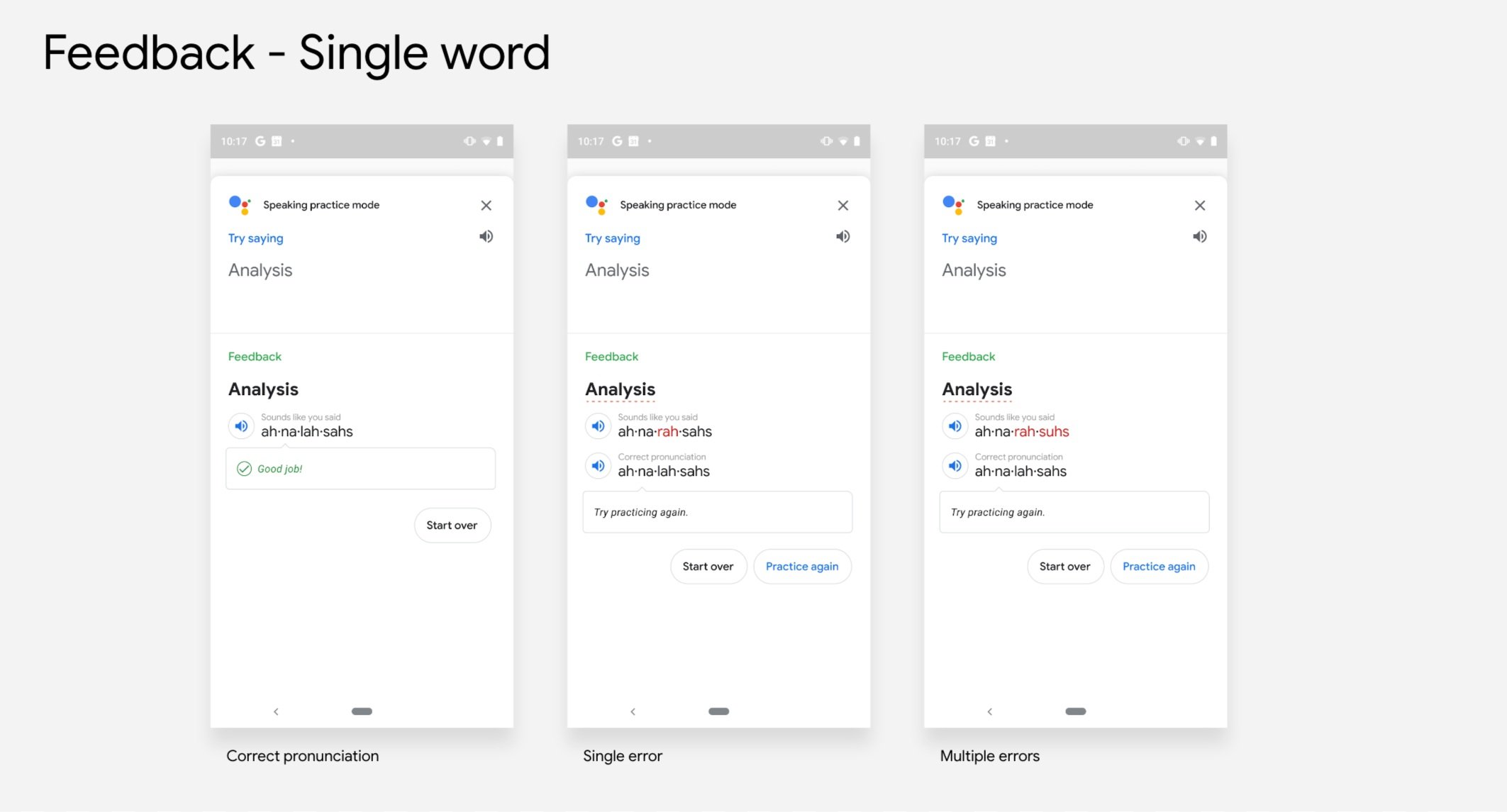

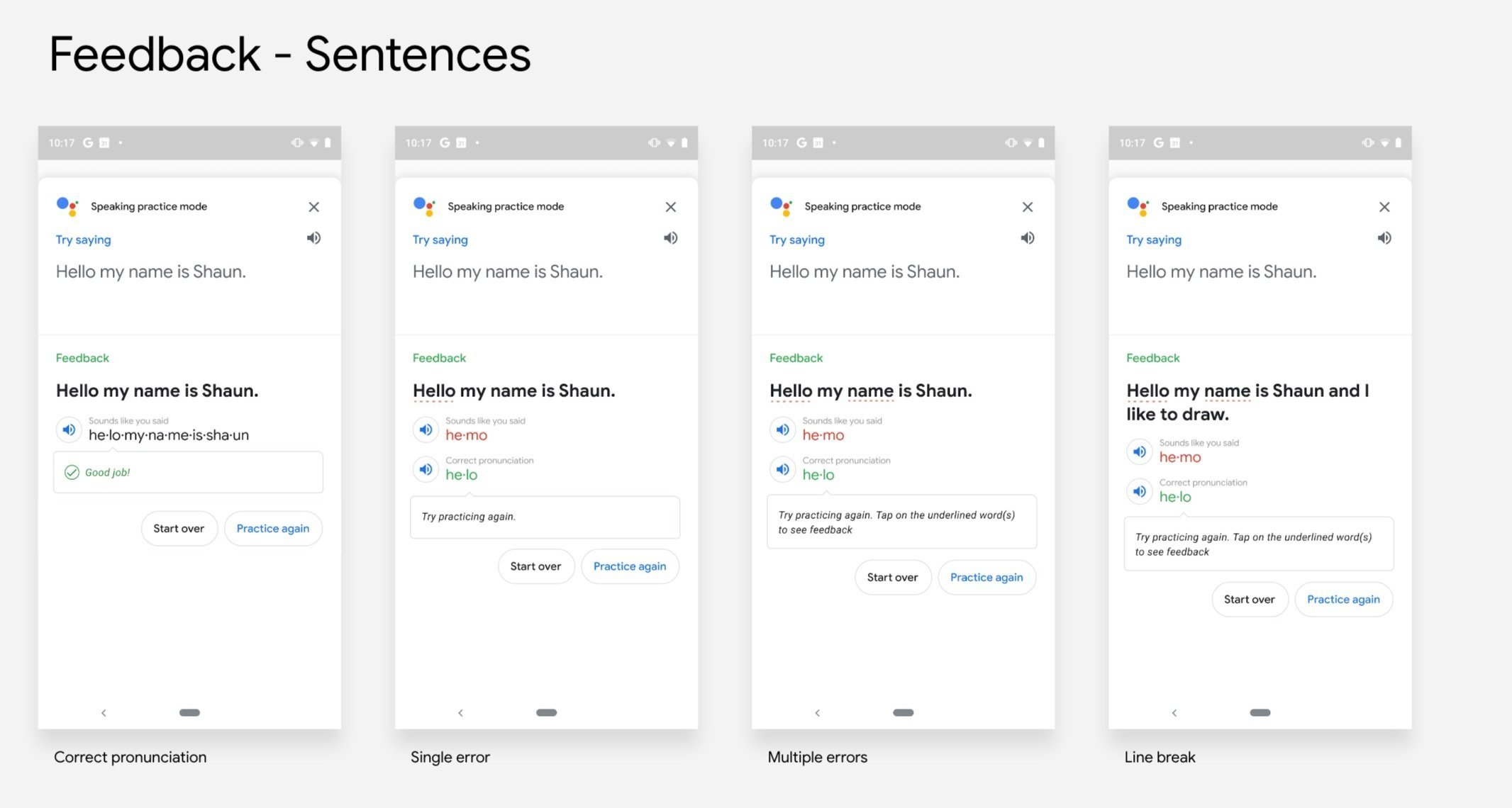

Speaking Practice

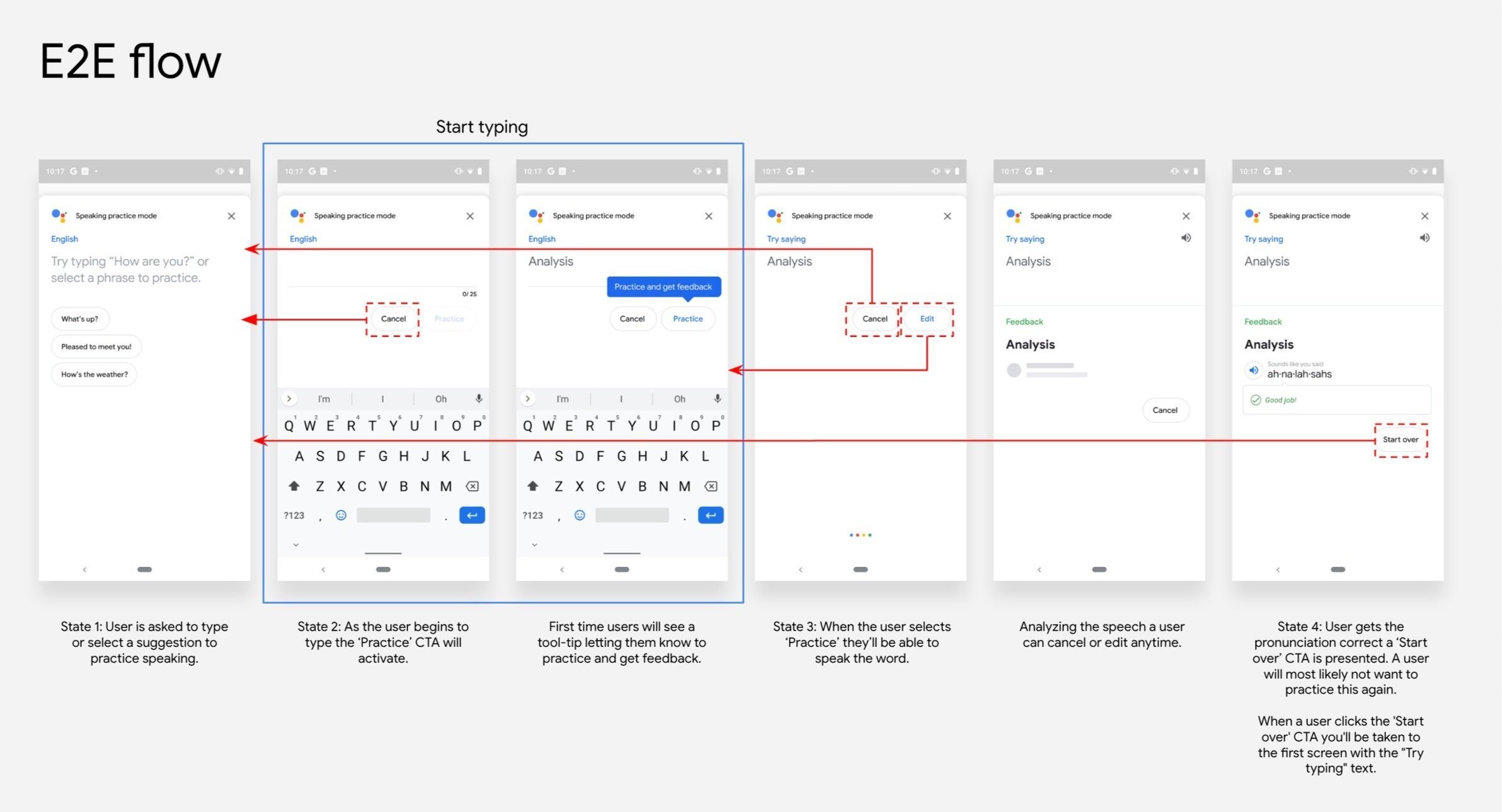

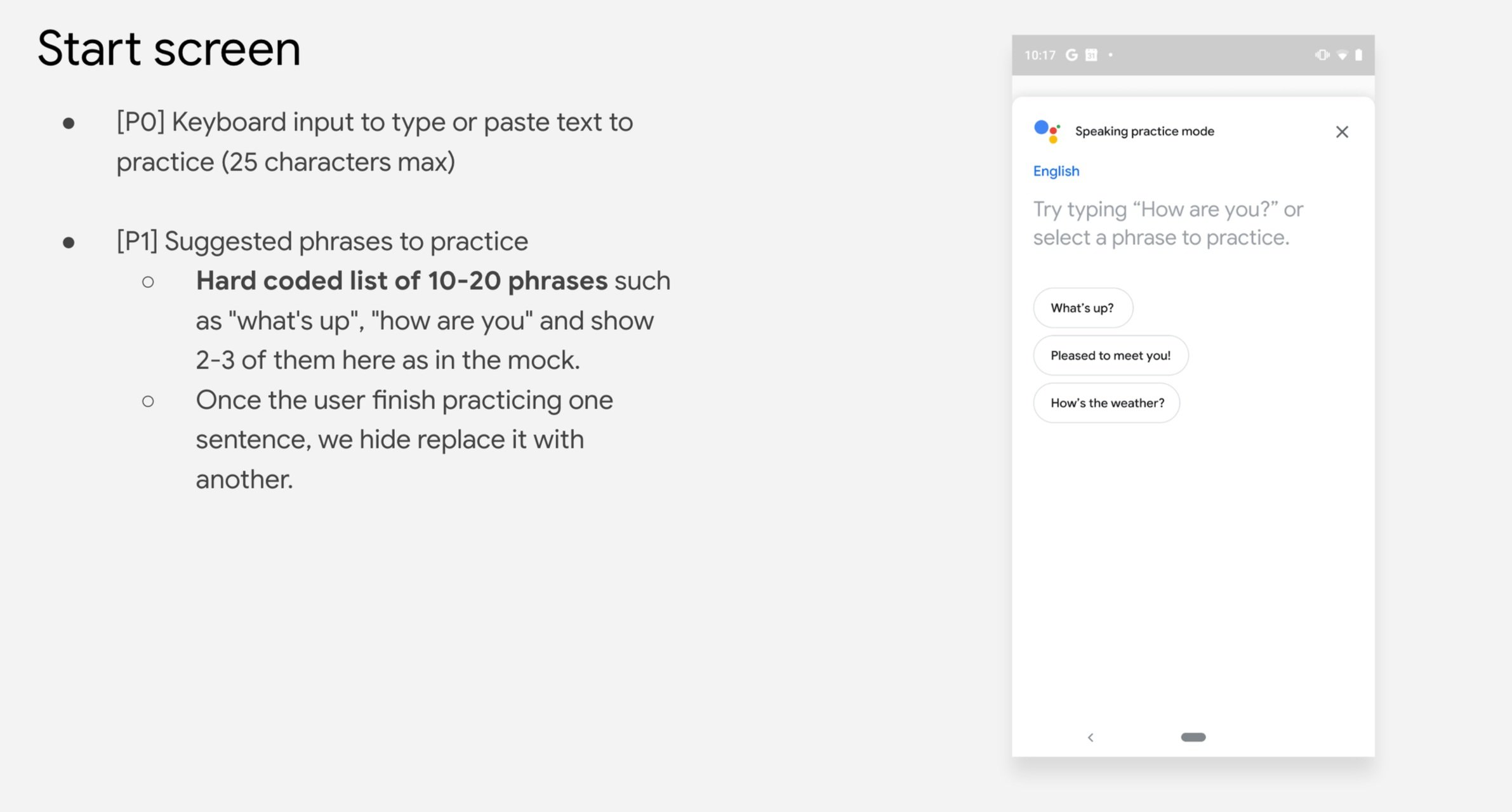

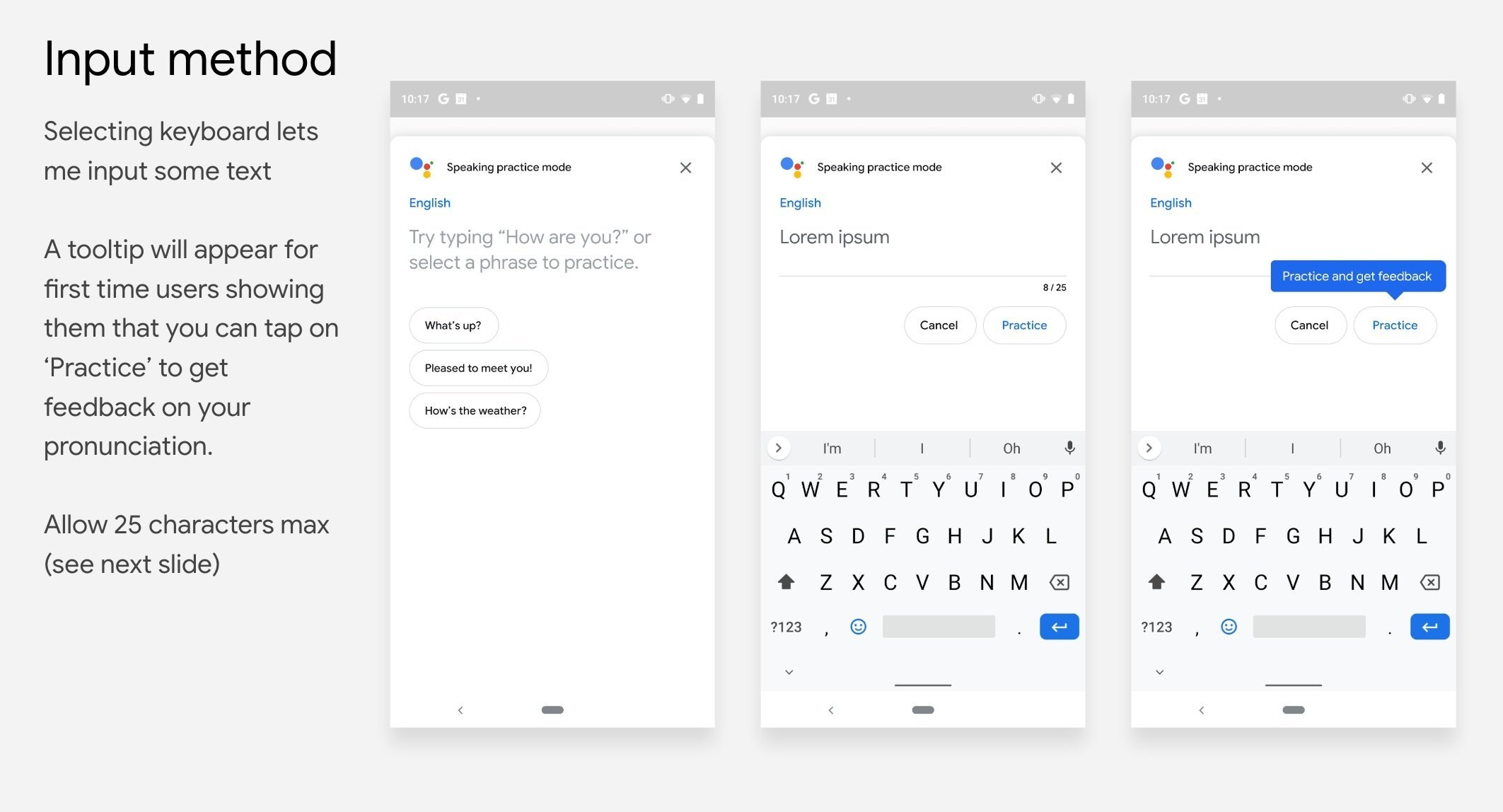

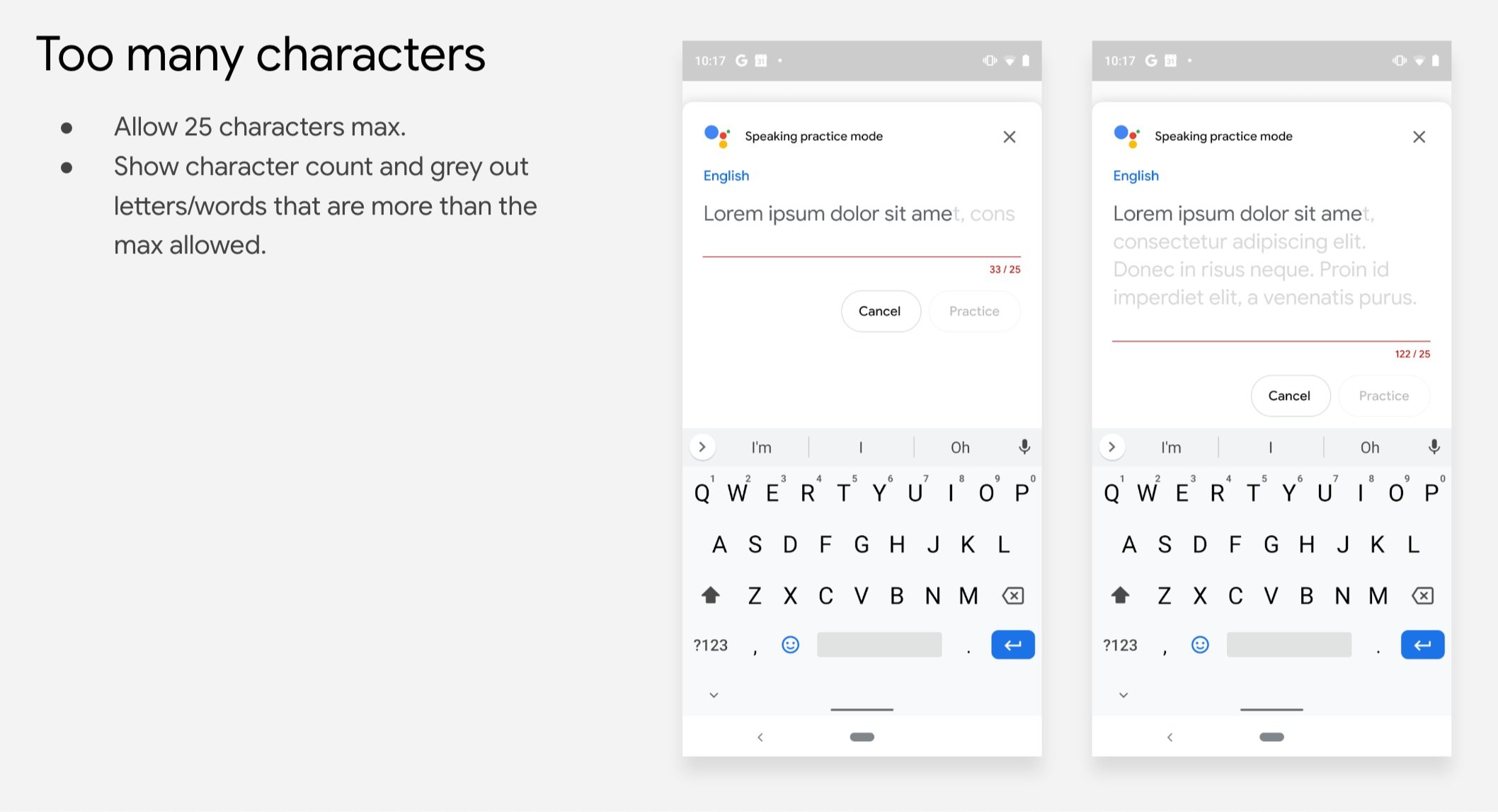

Assistant users in our translation/language products are often language learners looking for an opportunity to evaluate and improve their spoken English. Speaking Practice is an Assistant tool that evaluates users’ speech, where the text is either provided by the user or borrowed from another application (e.g., Interpreter Mode, one-shot translate, Chrome, YouTube).

Designed and developed an end-to-end experience that allows users to practice suggested phrases or their own free speech.

Led and guided the project vision to incorporate pronunciation cross-functionally into other features and surfaces using a custom half-sheet modal.